Performance II

In my last post about “Performance“, i did talk about a ZFS tuning parameter: zfs_prefetch_disable. And that was a ZFS read parameter…

In this post, we will take a look in another one, with the same “water to wine” effect. This time with focus on writes…

We have a set of informations available for our clients on our storage solution, so many performance related info can be verified. So, for some reason, a few storages were showing big latency times for write requests. And we were not talking about so many write requests…

Well, writes were to be served by the slog device, and so should be very fast. Indeed, we were hitting the SSD’s and the latency times were good in this stage. But the data must go to the disks (really?), and there, things(asvc_t) were not so good (+100ms, +150ms, +200ms) while in spa_sync. And with that “low” performance, we were having commits much more frequent than 30 seconds.

Next step was look into the workload, and see if we were talking about a really random write workload. Using the iopattern Dtrace script, we could see that while in spa_sync, we were facing 99% of random writes, and so, not writing much to the disks (90MB/s, 100MB/s for the whole pool). The question was: What is random for the iopattern? ;-)

Actually, i did want to know if the size of the seek was considered, and the answer were (as always), on the manual (the D script itself):

# An event is considered random when the heads seek. # This program prints the percentage of events # that are random. The size of the seek is not # measured - it's either random or not.

RTFM and answered.

One possible answer could be ZFS fragmentation, but just 10% of the pool was used.

We do not have the answer for the problem, but we need to consider what we have (some good points):

– A few NFS writes per second;

– The slog device working just fine (good latencies);

– A short interval between spa_syncs (less than 10 seconds);

– 99% random writes during spa_sync;

– Big latency times on discs (+100ms/asvc_t )

– Pool empty (10% used);

– A few (big files) being updated (something like a hundred);

My bet here was that: the system is complicating itself with that simple work (i do that most of the time too). The spot is that we have (again) a busy disk but for the wrong reason. That reminded me a great blog post about I/O reordering and disk queue depth. And an important phrase:

“The disk IO sub-systems are built to provide maximum throughput“…

From that post we can get this usefull script (sd for scsi) too:

fbt:sd:sdstrategy:entry,

fbt:sd:sdstrategy:entry

{

start[(struct buf *)arg0] = timestamp;

}

fbt:sd:sdintr:entry,

fbt:sd:sdintr:entry

/ start[(this->buf = (struct buf *)((struct scsi_pkt *)arg0)->\

pkt_private)] != 0 /

{

this->un = ((struct sd_xbuf *) this->buf->b_private)->xb_un;

@[this->un] = lquantize((timestamp - start[this->buf])/1000000,

60000, 600000, 60000);

@q[this->un] = quantize((timestamp - start[this->buf])/1000000);

start[this->buf] = 0;

}

Running that on a storage with the problem, we could see thousands of operations that took ~200ms. A really bad scenario…

And a really important information (here you will know the zfs tunable), the ZFS default vdev_max_pending for these storages were the old 35. And to understand the whole history about LUN queue, here there is another great post.

So let’s stop talking and see if my guess is true. To do that, the simple procedure is to limit the queue to 2, so we know that will be just one active I/O and another waiting (without reordering of any sort). If that do change the results, we are in the right direction, if not, back to the lab.

echo zfs_vdev_max_pending/W0t2 | mdb -kw zfs_vdev_max_pending: 0x23 = 0x2

Let’s look at the iostat… and… Bingo! Lantency times down to a few ms, and running the above Dtrace script again, all operations like 5ms, 10ms!

So, that was just to see if we were in the right direction, but change something from 35 to 2 is radical. Then, let’s put it to 10 (the actual value in ZFS implementation), and see if we have a good performance as well…

echo zfs_vdev_max_pending/W0t2 | mdb -kw zfs_vdev_max_pending: 0x2 = 0xa

Good! Not so good as 0x2 (with vdez_max_pending=10 we did see times like 30ms), but now we need some time to understand the whole impact (eg. reads), and configure a good(definitive) number for this workload.

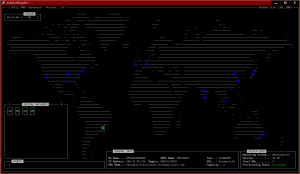

OBS: So, one more Dtrace script for the utilities folder, and here another one.

peace